What's the robots.txt file?

Web robots (also called crawlers, web wanderers or spiders) are programs that traverse the Web automatically. Among their many uses, search engines rely on them to index web content. The robots.txt file implements the Robots Exclusion Protocol (REP), which lets a website administrator define which parts of the site are off-limits to specific robot user agents. For example, administrators can use Allow rules to open their web content to robots and Disallow rules to keep robots out of CGI, private and temporary directories whose pages they do not want indexed.
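To see how a crawler interprets such rules, here is a minimal sketch using Python's standard-library `urllib.robotparser` module. The choice of tool is ours, for illustration only, and the rules and paths below are made-up examples rather than any real site's file. `RobotFileParser.parse()` takes the file's lines directly, so nothing is fetched over HTTP, and `can_fetch(useragent, url)` reports whether a given robot may request a given path.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules only: keep every robot out of CGI and temporary directories.
RULES = """\
User-agent: *
Disallow: /cgi-bin/
Disallow: /tmp/
"""


def is_allowed(rules: str, user_agent: str, path: str) -> bool:
    """Return True if `user_agent` may fetch `path` under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())  # parse the text directly instead of downloading it
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    for path in ("/index.php", "/cgi-bin/search.cgi", "/tmp/cache.html"):
        verdict = "allowed" if is_allowed(RULES, "Googlebot", path) else "blocked"
        print(f"{path}: {verdict}")
```

Because the only group is `User-agent: *`, the same rules apply to Googlebot and to any other robot: `/index.php` is allowed, while both paths under the disallowed directories are blocked.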

To learn more about robots.txt for Joomla!, please take a look at this post.

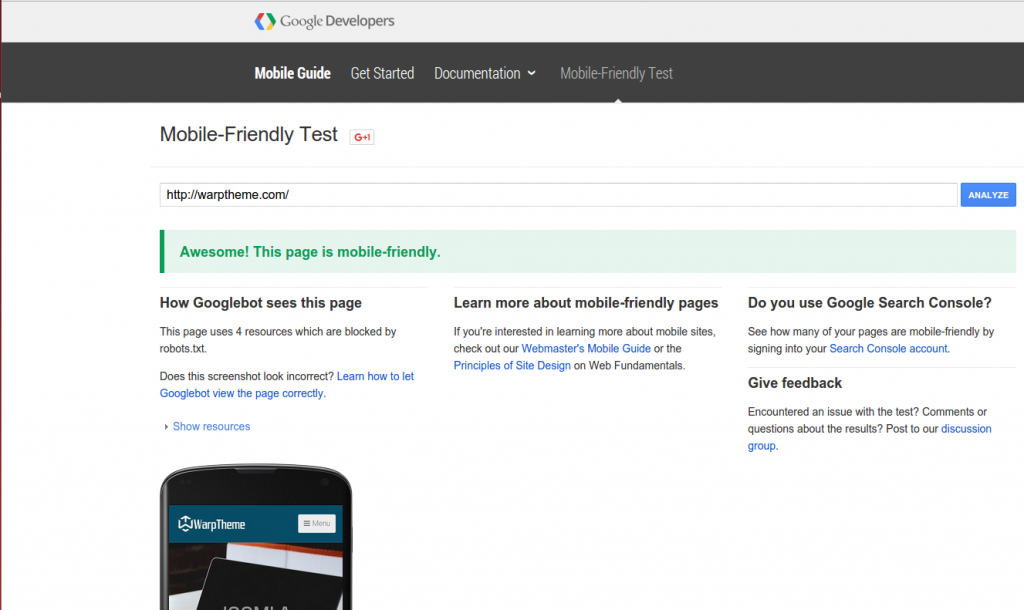

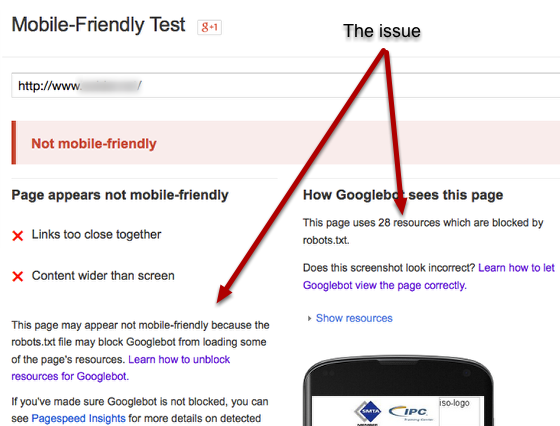

Recently, some users have reported (via the Joomla! community forum) that their web pages still do not pass Google's Mobile-Friendly Test.

What's the Problem?

By default, the robots.txt file shipped with Joomla! blocks Google's robots from accessing directories such as images and templates, which prevents most of the page's resources from being loaded. To get around this issue, you'll need to make a few minor changes to the file by deleting these lines:

Disallow: /images/

Disallow: /templates/

Additionally, if you're using modules or plugins that load their own CSS styles, you might also need to delete these lines:

Disallow: /modules/

Disallow: /plugins/

If you are having the same issue, simply contact us and we will help you fix it right away.
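Before uploading the edited file, you can sanity-check it offline. The sketch below assumes Python's standard-library `urllib.robotparser` as the checker. `BEFORE` is a hypothetical excerpt in the style of an older Joomla! robots.txt, not a verbatim copy of any release, and the resource paths (`mytemplate`, `mod_example` and so on) are made-up examples. Note that this parser applies the first matching rule, while Google documents that the most specific rule wins; for a plain list of Disallow lines like this one, both approaches give the same result.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical "before" file in the style of an older Joomla! robots.txt (not verbatim).
BEFORE = """\
User-agent: *
Disallow: /administrator/
Disallow: /images/
Disallow: /modules/
Disallow: /plugins/
Disallow: /templates/
Disallow: /tmp/
"""

# The same hypothetical file after deleting the four Disallow lines discussed above.
AFTER = """\
User-agent: *
Disallow: /administrator/
Disallow: /tmp/
"""

# Hypothetical resources a page might need in order to render correctly.
RESOURCES = [
    "/images/logo.png",
    "/templates/mytemplate/css/template.css",
    "/modules/mod_example/css/style.css",
    "/plugins/system/example/js/script.js",
]


def blocked_resources(robots_txt: str, user_agent: str = "Googlebot") -> list[str]:
    """Return the entries of RESOURCES that `user_agent` is not allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in RESOURCES if not parser.can_fetch(user_agent, path)]


if __name__ == "__main__":
    print("before:", blocked_resources(BEFORE))
    print("after: ", blocked_resources(AFTER))
```

With the hypothetical `BEFORE` file, all four resources are blocked; with `AFTER`, none are. Passing this check only shows that the rules no longer block those paths; re-running the Mobile-Friendly Test is still the way to confirm that Google can render the page.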